Errors in a DAG are sometimes difficult to find. Things to check:

- Verify that the DAG file is in the correct folder.
- Determine the DAGs folder via `airflow.cfg`. The folder must be an absolute path.
- Check the DAG file for syntax errors.
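
The checks above can be scripted. The following is a minimal sketch in plain Python, assuming `airflow.cfg` keeps the `dags_folder` setting in its `[core]` section; the helper names `read_dags_folder` and `check_dag_file` are hypothetical and not part of Airflow.

```python
import configparser
import os


def read_dags_folder(cfg_path):
    """Return the dags_folder value from the [core] section of an airflow.cfg file."""
    # interpolation=None keeps literal % characters in other settings from raising.
    parser = configparser.ConfigParser(interpolation=None)
    with open(cfg_path) as handle:
        parser.read_file(handle)
    return parser.get("core", "dags_folder")


def check_dag_file(dags_folder, dag_path):
    """Return a list of problems found for one DAG file (hypothetical helper)."""
    problems = []
    if not os.path.isabs(dags_folder):
        problems.append("dags_folder is not an absolute path")
    if not os.path.isfile(dag_path):
        problems.append("DAG file does not exist")
        return problems
    folder = os.path.realpath(dags_folder)
    target = os.path.realpath(dag_path)
    if not target.startswith(folder + os.sep):
        problems.append("DAG file is outside the DAGs folder")
    try:
        # Compiling without executing is enough to surface syntax errors.
        with open(dag_path) as handle:
            compile(handle.read(), dag_path, "exec")
    except SyntaxError as exc:
        problems.append(f"syntax error on line {exc.lineno}: {exc.msg}")
    return problems
```

An empty list from `check_dag_file` only means these three checks passed. Import errors and problems in the DAG logic still need to be checked separately.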


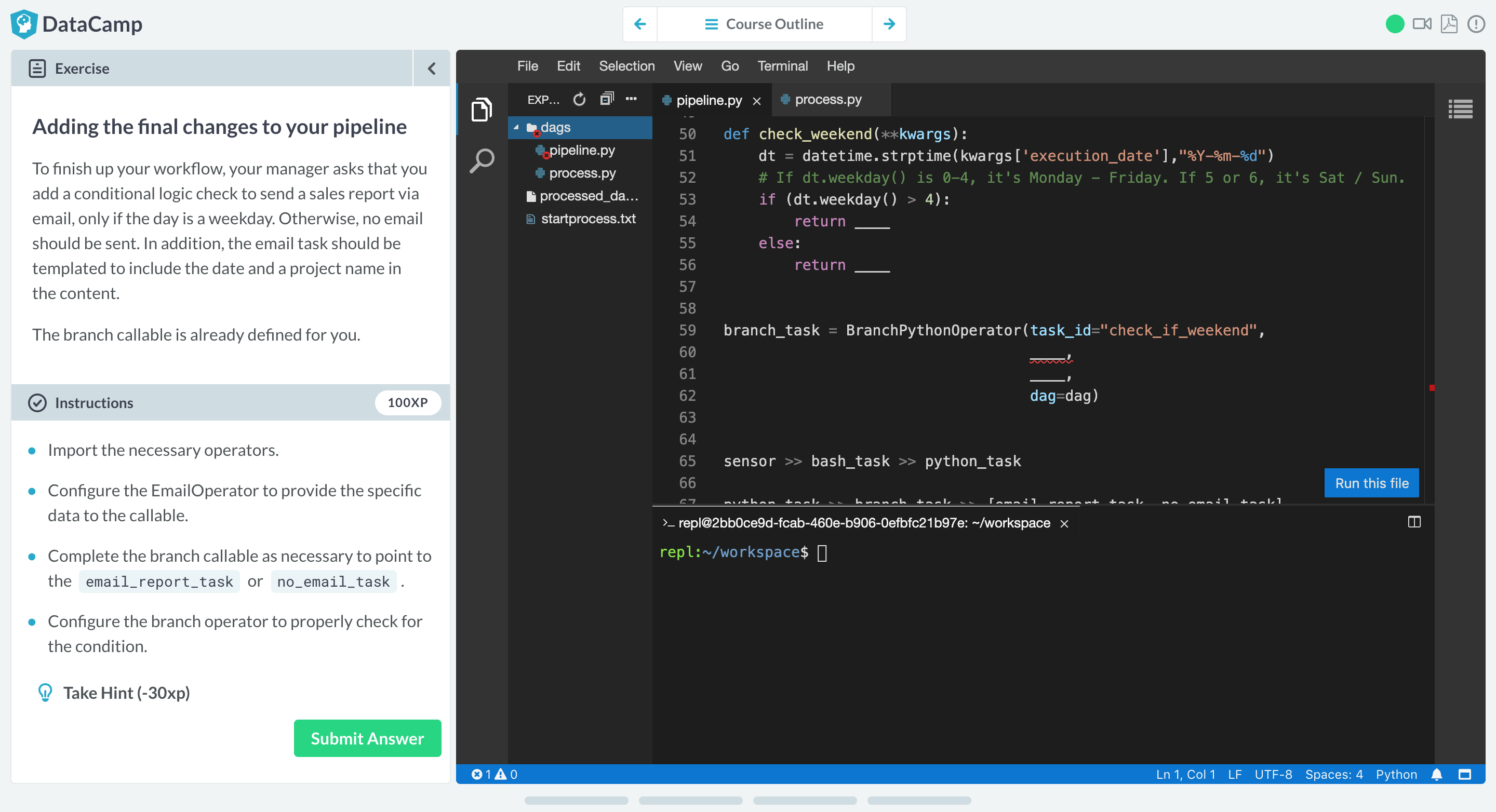

Debugging and troubleshooting in Airflow

Typical issues:

- The scheduler is not running. Fix by running `airflow scheduler` from the command line.
- At least one `schedule_interval` hasn't passed. Modify the attributes to meet your requirements.
- Not enough tasks are free within the executor to run.

Different executors handle running the tasks differently. The executor in use is named in log output such as `INFO - Using SequentialExecutor`.

- `SequentialExecutor`: the default. It runs one task at a time, which is useful for debugging. While functional, it is not really recommended for production.
- `LocalExecutor`: treats tasks as processes. Parallelism is defined by the user, and it can utilize all resources of a given host system.
- `CeleryExecutor`: built on Celery, a general queuing system written in Python that allows multiple systems to communicate as a basic cluster. Multiple worker systems can be defined. It is significantly more difficult to set up and configure, but extremely powerful for organizations with extensive workflows.

Why sensors? For example, to add task repetition without loops. A sensor sits in the dependency chain like any other task, e.g. `init_sales_cleanup >> file_sensor_task >> generate_report`. Other sensors:

- `ExternalTaskSensor`: waits for a task in another DAG to complete.
- `HttpSensor`: requests a web URL and checks for content.
- `SqlSensor`: runs a SQL query to check for content.

Many others are available in the sensors libraries.

Tests can be written to validate functionality. Components are extensible, and you can build on a wide collection of existing components.

The open-source nature of Airflow ensures you work on components developed, tested, and used by many other companies around the world. In the active community you can find plenty of helpful resources in the form of blog posts, articles, conferences, books, and more.

Airflow's user interface provides both in-depth views of pipelines and individual tasks, and an overview of pipelines over time. From the interface, you can inspect logs and manage tasks, for example retrying a task in case of failure.

Rich scheduling and execution semantics enable you to easily define complex pipelines, running at regular intervals. Backfilling allows you to (re-)run pipelines on historical data after making changes to your logic. And the ability to rerun partial pipelines after resolving an error helps maximize efficiency.
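
To make the idea of backfilling concrete: conceptually, it means re-running the pipeline once for each historical schedule interval in a date range. The sketch below is a toy model in plain Python, assuming a daily schedule. It is not Airflow's scheduler logic, and the names `daily_intervals`, `backfill`, and `run_pipeline` are hypothetical.

```python
from datetime import date, timedelta


def daily_intervals(start, end):
    """Yield (interval_start, interval_end) pairs, one per day in [start, end)."""
    current = start
    while current < end:
        following = current + timedelta(days=1)
        yield current, following
        current = following


def backfill(start, end, run_pipeline):
    """Call run_pipeline once per historical daily interval and collect the results."""
    return [run_pipeline(begin, finish) for begin, finish in daily_intervals(start, end)]
```

Calling `backfill(date(2022, 1, 1), date(2022, 1, 4), run_pipeline)` would invoke the hypothetical `run_pipeline` three times, once for each of the first three days of January 2022.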

Workflows can be stored in version control so that you can roll back to previous versions. Workflows can be developed by multiple people simultaneously.


Airflow is a batch workflow orchestration platform. The Airflow framework contains operators to connect with many technologies and is easily extensible to connect with a new technology. If your workflows have a clear start and end, and run at regular intervals, they can be programmed as an Airflow DAG. The DAG is Airflow's core model that represents a recurring workflow.

If you prefer coding over clicking, Airflow is the tool for you. Workflows are defined as Python code:

- Dynamic: Airflow pipelines are configuration as code (Python), allowing for dynamic pipeline generation.
- Extensible: Easily define your own operators.

```python
from datetime import datetime

from airflow import DAG
from airflow.decorators import task
from airflow.operators.bash import BashOperator

# A DAG represents a workflow, a collection of tasks
with DAG(dag_id="demo", start_date=datetime(2022, 1, 1), schedule="0 0 * * *") as dag:
    # Tasks are represented as operators
    hello = BashOperator(task_id="hello", bash_command="echo hello")

    @task()
    def airflow():
        print("airflow")

    # Set dependencies between tasks
    hello >> airflow()
```

This snippet defines:

- A DAG named "demo", starting on Jan 1st 2022 and running once a day. A DAG is Airflow's representation of a workflow.
- Two tasks, a BashOperator running a Bash script and a Python function defined using the `@task` decorator.
- `>>` between the tasks defines a dependency and controls in which order the tasks will be executed.

Airflow evaluates this script and executes the tasks at the set interval and in the defined order. The status of the "demo" DAG is visible in the web interface.

This example demonstrates a simple Bash and Python script, but these tasks can run any arbitrary code. Think of running a Spark job, moving data between two buckets, or sending an email. The same structure can also be seen running over time, where each column represents one DAG run.

These are two of the most used views in Airflow, but there are several other views which allow you to deep dive into the state of your workflows.
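
To show how a `>>` between tasks can record a dependency and determine execution order, here is a toy model in plain Python. It is a sketch under stated assumptions, not Airflow's implementation: the `ToyTask` class and `execution_order` function are hypothetical, and ordering is delegated to the standard library's `graphlib` (Python 3.9+).

```python
from graphlib import TopologicalSorter


class ToyTask:
    """Hypothetical stand-in for a task; it only records its downstream tasks."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.downstream = []

    def __rshift__(self, other):
        # a >> b records "b runs after a"; returning b lets a >> b >> c chain.
        self.downstream.append(other)
        return other


def execution_order(tasks):
    """Return task ids in an order that respects every recorded dependency."""
    predecessors = {t.task_id: set() for t in tasks}
    for t in tasks:
        for d in t.downstream:
            predecessors.setdefault(d.task_id, set()).add(t.task_id)
    return list(TopologicalSorter(predecessors).static_order())
```

With `hello = ToyTask("hello")` and `airflow = ToyTask("airflow")`, evaluating `hello >> airflow` and then calling `execution_order([airflow, hello])` places `hello` before `airflow`, regardless of the order in which the tasks are listed.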
